Kim Cascone wrote in his seminal paper, The Aesthetics of Failure, that “the medium is no longer the message in glitch music: the tool has become the message.” He identifies glitch music as emerging “from the ‘failure’ of digital technology . . . bugs, application errors, system crashes, clipping, aliasing, distortion, quantization noise, and even the noise floor of computer sound cards are the raw materials . . ..” He identifies this as “post-digital”[1].

Vanhanen also writes about the origins of glitch as “the unintentional sounds of a supposedly silent medium.” This is “the result of a two-way relationship between hardware/software and the producer (mis)using it.”[2] Shelly Knotts writes about live-code driven failure as an “inevitability of imperfection.” Error does not generally result from an intentional misuse but is a constant possibility which extends to our entire environment. Live code errors make the audience and practitioners aware of the “imperfection of technical systems” even as these systems surround us and we rely on them, making it potentially a critique of liberal technocracy and capitalism more generally.[3]

However, all of these writers place all of the sound within the machine. Knotts notes that while “a jazz musician [might] suffer a broken string or reed in a performance, its unlikely their entire instrument will collapse.”[3] George Lewis, however, makes associations between 1980s computer music, which often did involve setting up systems in front of the audience, and (free / jazz) improvisation.

Lewis wrote that the computer music scene of the 1980s in the San Francisco Bay Area “was also widely viewed as providing possibilities for itinerant social formations that could challenge institutional authority and power.” This music was played in a band setting, and formed an improvisational practice, “from a collaborative rather than an instrumental standpoint, negotiating with their machines rather than fully controlling them.” (Unfortunately, this exciting beginning led not only to live coding, but also to “ubiquitous computing,” which lives on as IoT.)[4]

Lewis himself also experimented with the borders of failure with systems coupled with his trombone. When I was at Sonology in 2005-6, Clarence Barlow described a theatre piece that I believe he attributed to Lewis. Lewis was on stage with his trombone and an effects box, but the effects box was not working. A tech came to assist, then another, then another, until a team of engineers disassembled the entire effects unit. While this was happening, Lewis sat down to eat his dinner. The piece ended when the box was completely disassembled. Unfortunately, I can’t find a reference to this piece, although searching for it did lead me to the writing mentioned above.

Lewis’s piece would more traditionally be classed as theatre rather than glitch. At a stretch, one could claim the effects box is misused. The technicians collaborate on “fixing” the box, and the piece becomes a ritual of debugging, a communal, music-not-making practice. In taking a dinner break, Lewis reflects on how tech outages cause work stoppages. In his piece, the tech “failure” causes the entire piece to “collapse”. His trombone is silent.

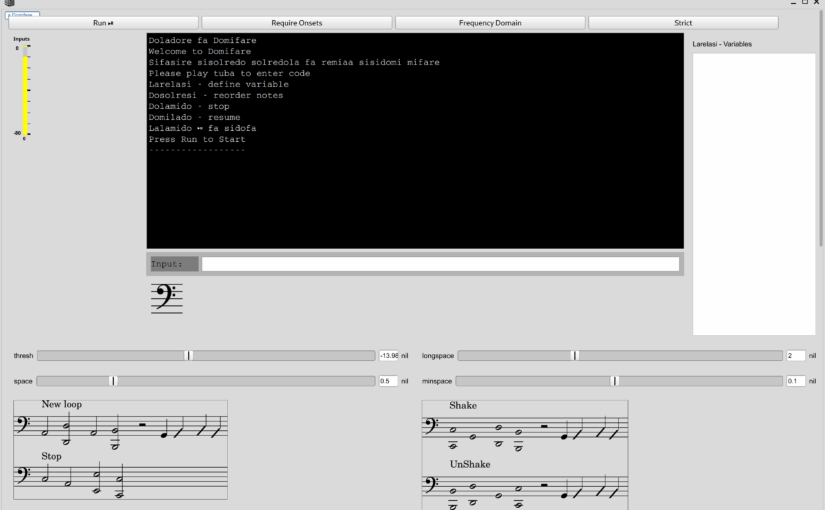

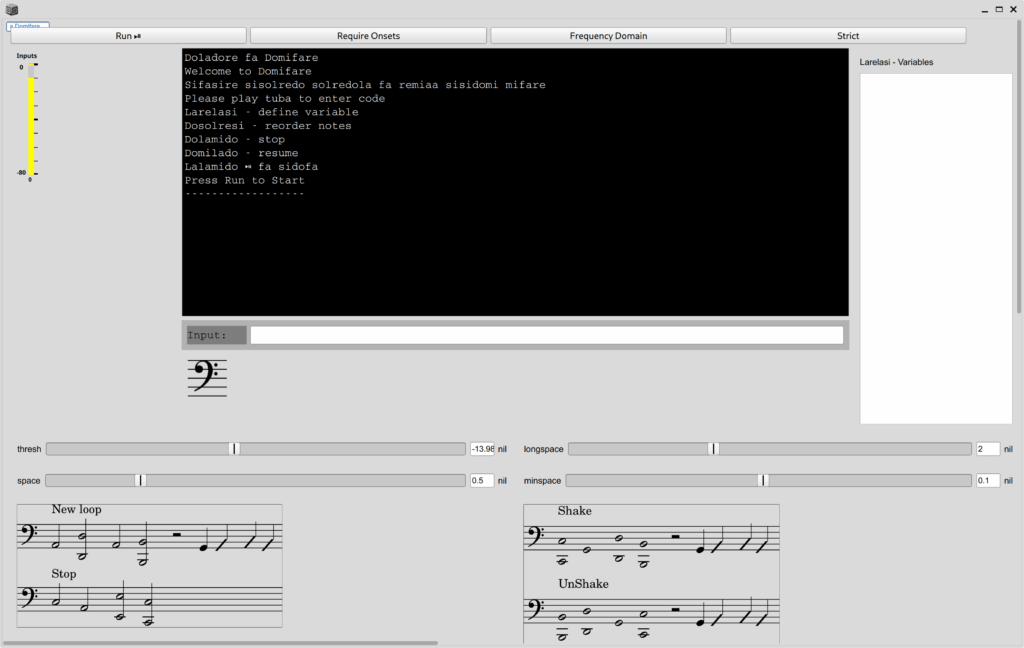

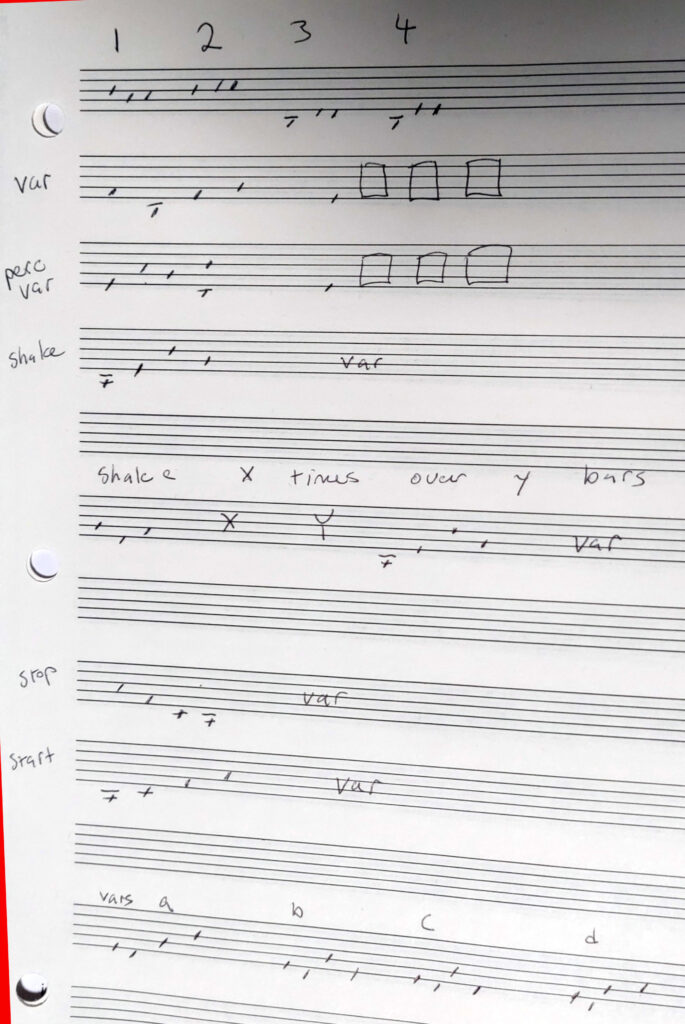

Domifare is also a brass piece that intentionally incorporates technical error. But, unlike the “post-digital” “glitch” pieces of 25 years ago, the errors don’t lie in mangled sound output, but rather in input failure. When the piece functions, it functions around 20% of the time. Sometimes less. On Monday, it was a lot less. Over 15 minutes, not a single command executed.

By placing the instrument as an input to the REPL, Domifare queers the acoustic / digital binary and makes total failure audible. If 2000 was post-digital for Cascone, clearly 25 years later, with low bass, we are post again. Indeed, while my computer had no output, the input was constantly present. Although the system uses the logics of live code, especially ixilang, functionally it bears a lot of similarity to responsive systems, such as Voyager by Lewis[5] or Diamond Curtain Wall by Anthony Braxton[6]. Arguably, it’s a simpler system because the results are deterministic – or are when the system works.

Several years ago, I played a free improv set with others at The Luggage Store Gallery in San Francisco. I brought my laptop and my tuba, with the intention to switch between them part way through the set. As we started, my computer would only make static. Something was wildly wrong and after a few minutes of trying to fix it, I switched to tuba for the remainder of the set. Speaking to others afterwards, Matt Davignon said that he thought the static had been on purpose, and he thought I was “one of those computer musicians.” My old double bass teacher, Damon Smith, put it in a positive, enthusiastic light. “It doesn’t matter, because you have a tuba!” He went on, “All computer musicians should have tubas with them!”

When I announced I was giving up on Domifare, Evan Raskob, echoing Smith, called out that I should have just played tuba for a few minutes. I should have.

Although it directly contradicts the TopLap Manifesto regarding backups [7], perhaps Smith is right. All computer musicians should have tubas.

Works Cited

[1] K. Cascone, ‘The Aesthetics of Failure: “Post-Digital” Tendencies in Contemporary Computer Music’, Comput. Music J., vol. 24, no. 4, pp. 12–18, Dec. 2000, doi: 10.1162/014892600559489.

[2] J. Vanhanen, ‘Virtual Sound: Examining Glitch and Production’, Contemp. Music Rev., vol. 22, no. 4, pp. 45–52, Dec. 2003, doi: 10.1080/0749446032000156946.

[3] S. Knotts, ‘Live coding and failure’, in The Aesthetics of Imperfection in Music and the Arts: Spontaneity, Flaws and the Unfinished, A. Hamilton and L. Pearson, Eds., 2020, pp. 189–201.

[4] G. Lewis, ‘From Network Bands to Ubiquitous Computing: Rich Gold and the Social Aesthetics of Interactivity’, in Improvisation and social aesthetics, G. Born, E. Lewis, and W. Straw, Eds., in Improvisation, community, and social practice. Durham: Duke University Press, 2017, pp. 91–109.

[5] G. Lewis, Voyager. 1985.

[6] A. Braxton, Diamond Curtain Wall. 2005.

[7] ‘ManifestoDraft – Toplap’. Accessed: Jul. 16, 2025. [Online]. Available: https://toplap.org/wiki/ManifestoDraft