Talk by Louise Brown.

BCS is the British Computer Society, a professional body representing members in the IT profession. It is a learned society with a royal charter, so it can charter people and offer qualifications, and it is also a charity. It wishes to be the gold standard for diversity in the IT sector.

15% of the 70k members are women. There are more than 50 specialist groups, one of which is BCS Women (and another is the Open Source Specialist Group).

BCS Women is a volunteer-driven group. They aim to support members, raise awareness of women in IT and get more women into IT. They want to put up posters about women in IT in schools. They run Android programming ‘family fun days’ – a one-day workshop, aimed at families, that lets people try coding.

They run a Lovelace Colloquium for undergraduate women. They also ran Open Source taster days jointly with the Open Source Specialist Group and Fossbox, covering AppInventor, an intro to git and an intro to Python.

FLOSS-UK in the spring has a call for papers.

The BCS is also having an AGM in a few days with a FOSS event.

www.bcs.org/bcswomen

www.bcs.org/category/17484

Author: Charles Céleste Hutchins

live blogging flossie: a tv collaboration in Barcelona

They use Pure Data not only in software, but also with paper objects and string stuck to walls.

Free software changes your relation with your computer and by extension your relationship with a lot of technologies in your daily life.

The collective uses linux. Free software freed them from the concept of ‘good’ and ‘bad’ uses. They found new uses. Their technical experience is considered less valid, but people call on them to discuss politics. This frees them to try to do whatever they want.

they made a ‘possible impossible machine.’ the first version had a terrible interface. it was made collectively in a workshop by all the participants. they had a pd patch to make all decisions. this was absurd. this is not the best way to handle negotiations.

some uses of technology have a tendency to erase the human touch.

they use old tech to resist planned obsolescence.

Gender construction: they don’t know how it works, but they know how to use it. Women there have worked to lose fear of opening the closed boxes. Open source has helped enable this.

she has talked a lot about their process around ‘errors’. failure is part of free software culture – to recognise bugs and not hide problems. there can also be a problem when people ‘celebrate’ the power of a mistake (ie the aesthetics of glitch) in that foss guys sometimes get upset about it. the speaker feels it’s a part of feminism to give less power to the ‘expert’ but to work with people who are experimenting, learning and making interesting material. Her political position is against the specialist.

minipimer.tv

live blogging flossie: screens in the wild

twitter: @wildscreens

Digital media embedded in the environment. Mediated urban space. The most common use of big screens is commercial.

How to do something different with screens and allow people access instead of letting advertisers dictate to us?

there are some big BBC screens in the centre of some communities. 23(?) in the UK? These are supposed to regenerate communities. How will this work? Nobody knows, but we want a big screen. They are high up and it’s hard to do interactive content.

the research challenge is how to integrate the screens into the environment in a way that the community has input.

How does the public engage with them? How can they be used to interact? How can we enable open access?

Active research and iterative design process.

they did community workshops.

they came up with something like a photo booth. easy to use. people do a pose and others in remote locations copy those poses to create an interaction.

Open access is very difficult, especially things that run a long time. The technology is also expensive, which makes it hard to get access.

www.screensinthewild.org

Live blogging flossie: Pulse Project- touching as listening

(Last year, Flossie was women-only and a bunch of men complained. This year, they bowed to pressure and decided to let anyone attend. I’m the only boy in the room.)

Talk by Michelle Lewis-King, an American who uses SuperCollider(!).

This is based on pulse-reading and some Chinese medicine principles. She has an acupuncture degree.

Occidental medicine is based on cutting apart and looking at dead bodies, whereas Chinese medicine is more ‘alchemistic’, she says, and is based on feeling living bodies.

Pulses are abstractly linked to a type of music of the spheres.

She started drawing people’s pulses at different depths. This is sonic portraiture.

She found it problematic to ask questions in the SuperCollider community because of differences in ‘architecture’. Some of the tutorials are not easy. She says the book is great because of the diversity of approaches. People at conferences have criticised her code, which is not a fun experience.

The community also provides a lot of support. The programme is free, community oriented and a useful tool.

She’s playing one of her compositions. It’s a pulsing, very synthesised sound.

More info: journal.sonicstudies.org/vol04/nr01/a12 4th issue of the Journal of Sonic Studies

Twitter @vergevirtual

Vegan Apple Cake

- 225g (1¾ US Cups) self-raising flour (or 220g (still 1¾ US Cups) plain white flour + 1 tsp baking powder)

- ¼ tsp bicarbonate of soda

- 1 tsp baking powder

- A pinch of sea salt

- Optional: A pinch of ground cardamom seeds, a grating of fresh ginger (or use ½ tsp ground), ½ tsp cinnamon, ½ tsp nutmeg

- 450g (1 lb) cooking or dessert apples

- A little lemon juice

- 100g (½ US Cup) sugar

- 125 mL (½ US Cup) olive oil

- 1 mooshed banana

- 50g (¼ US Cup) soft brown or demerara sugar (or caster sugar is fine)

The spices are optional and you can use more or less to your taste. Once you’ve gathered your ingredients:

- Sift flour, bicarb of soda, salt and spices (if using) into a mixing bowl. Mix thoroughly.

- Peel the apples. Cut into very small, thin pieces. Toss the cut apples in a little lemon juice as you go, to keep them from browning.

- Mix the apples, sugar, oil and banana into the flour mix. Gently fold through until everything is thoroughly mixed. Turn into a greased cake tin.

- Level off the top of the batter. Sprinkle with sugar.

- Bake in a preheated moderately hot oven (200°C/Gas 6/400°F) for 30-40 mins. Test with skewer.

- Remove from oven. Allow to shrink slightly before turning out onto serving plate.

Variations

If you want a slightly heavier, richer cake, you can substitute 100g (½ US Cup) margarine + 1-2 Tbsp soy milk for the olive oil. Add the margarine just after sifting the flour and spices together, cut it into the flour mixture and rub it in to a breadcrumb consistency. Then add the soy milk just after adding the apples, sugar etc. Add enough to wet the batter and hold it together.

Julie Bindel

There’s been a new round in the ongoing Julie Bindel vs trans people row. She was scheduled to speak against pornography at freshers week at Manchester, but pulled out because of rape and death threats, which were scary enough that she reported them to the police. I want to be very clear that rape and death threats are wrong. I hope the police catch whoever did it. Obviously, I don’t know if the person who did it is trans or a misguided ‘ally’ or is somebody who is really insecure about feminist discourse on pron. There have been a lot of easily findable abusive tweets from trans people to her, but none I saw were threatening.

There is a lot of confusion in the media and from Ms Bindel herself about why trans people are so very displeased with her. She imagines that it was an extremely rude and insulting article she wrote several years ago, which I won’t bother to quote. Some media has reported that it’s a philosophical disagreement about the origins or cultural significance of trans identities. Both of these things are wrong.

Trans people protest Bindel because every time she has the opportunity to speak about us, she advocates against us receiving appropriate healthcare. She wants to uphold our freedom of how we identify and that’s nice and all, but a lack of appropriate healthcare would be a tremendous crisis. The NHS started covering transition for the same reason that the San Francisco Department of Health does: it’s much cheaper to do it than not do it. If there is no legal, prescription access to hormones, this will not stop people from taking them. It will, however, prevent them from being appropriately monitored. Unmonitored hormone usage is not particularly safe and can cause problems from blood clots to cancer as people get their levels wrong. In the NHS’s case, they found they were treating trans women who had, in desperation, attempted or succeeded in castrating themselves – dealing with the shock, the blood loss, infection and everything else that goes wrong when people try to perform surgery on themselves. In San Francisco, they found that lack of access to hormones led to the impossibility of passing, which meant that some poor trans women were extremely vulnerable and their only economic opportunities were in survival street sex work. Their HIV rates were around 70%.

Lack of access to healthcare kills. When she uses her very public platform to prevent us getting treatment, she is advocating that some of us die.

In the past, she’s written about trans regret. This is when somebody transitions and then changes their mind. To a cis person, this must seem like the worst thing ever. In reality, the regret rate is around 1%. This is lower than for most medical interventions, including for cancer. The 99% of people who are happy go on to lead productive lives, pay taxes, etc. Trans people tend to be much more economically productive post-transition, to the point where the medical intervention pays for itself in increased tax revenue.

There is a law in the UK called the Gender Recognition Act which prevents discrimination against (many) trans people and provides a mechanism by which people can update their documentation. When this was a bill, she and the Guardian actively campaigned against it, by finding every regretter they could and misrepresenting studies to claim the regret rate is much higher than it actually is.

These days, there are a few trans voices that get columns when issues about us come up, but for a long time the only people writing about us were cis people. Bindel became a public ‘expert’ on us because she already had a column – because she was cis. She’s still more famous than our rising star columnists and she still gets platforms over and over again where she proclaims that her ideology requires us to die. That the diagnosis of gender dysphoria should be eliminated and we be left to our self-identification without any medical support.

I wish that people would stop sending her abusive tweets. I wish people would stop offering her platforms to speak. I think that advocating for the removal of healthcare from a vulnerable population should disqualify one from speaking on any human rights issue.

Scrambling and Rotating

I’m still doing stuff with the Bravura font, so you’re going to have to follow a link to see it.

New things on that page include changing font size per item, rotating an individual object, constraining random numbers and shuffling an array. The last of these is not built into JavaScript, so I cut and pasted a function from Stack Overflow.
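The Stack Overflow function isn't reproduced in this post, so here is a minimal sketch of the two helpers, assuming the usual Fisher-Yates approach to shuffling. The names `randomInt` and `shuffle` are my own for illustration and may not match what the page actually uses.

```javascript
// Return a random integer between min and max, inclusive.
// `rand` defaults to Math.random but any function returning [0, 1) will do.
function randomInt(min, max, rand = Math.random) {
  return Math.floor(rand() * (max - min + 1)) + min;
}

// Fisher-Yates shuffle: returns a shuffled copy and leaves the input untouched.
function shuffle(items, rand = Math.random) {
  const result = items.slice();
  for (let i = result.length - 1; i > 0; i--) {
    // Pick a position from the not-yet-fixed part of the array and swap.
    const j = randomInt(0, i, rand);
    [result[i], result[j]] = [result[j], result[i]];
  }
  return result;
}
```

Passing the random source as a parameter is a design choice: it means the same helpers can later be driven by something other than `Math.random`.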

Right now I’m using random numbers, but the end version needs to work deterministically in response to data and probably everyone’s screens should more or less match. So the randomness is for now and not later.
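One way to get matching screens while keeping random-looking output is a seeded generator: every screen that starts from the same seed gets the same sequence. This is only a sketch, not what the piece does. It assumes a simple linear congruential generator with the Numerical Recipes constants, and `makeSeededRandom` is a made-up name. The randomness is low quality, but that is probably fine for laying out glyphs.

```javascript
// Create a deterministic stand-in for Math.random.
// Linear congruential generator: state = (1664525 * state + 1013904223) mod 2^32.
function makeSeededRandom(seed) {
  let state = seed >>> 0; // force to an unsigned 32-bit integer
  return function () {
    state = (Math.imul(1664525, state) + 1013904223) >>> 0;
    return state / 4294967296; // scale into [0, 1)
  };
}

// Two generators built from the same seed produce the same numbers,
// so two screens seeded from the same data would draw the same layout.
const screenA = makeSeededRandom(2013);
const screenB = makeSeededRandom(2013);
```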

I need to make some functions for drawing arrows and staves. And I need to figure out how the bounding boxes will work and how things will fade in and out. Every kind of glyph or drawn thing on the screen needs to be an object that takes parameters in regards to size and rotation and knows how to draw itself.
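A rough sketch of what such an object could look like, using only standard canvas 2D calls. `makeGlyph` and its property names are hypothetical, and it assumes the Bravura font has already been loaded by the page.

```javascript
// A drawable glyph that carries its own character, size and rotation.
// All names here are illustrative, not taken from the piece.
function makeGlyph(char, sizePx, rotationRadians) {
  return {
    char: char,
    sizePx: sizePx,
    rotation: rotationRadians,
    // Draw the glyph on a 2D canvas context, anchored at (x, y).
    draw: function (ctx, x, y) {
      ctx.save();                 // keep the caller's transform and font intact
      ctx.translate(x, y);        // move the origin to the glyph's position
      ctx.rotate(this.rotation);  // rotate around that origin
      ctx.font = this.sizePx + "px Bravura";
      ctx.textAlign = "center";
      ctx.textBaseline = "middle";
      ctx.fillText(this.char, 0, 0);
      ctx.restore();              // undo the translate and rotate
    }
  };
}

// In a browser this might be used as:
//   var ctx = document.getElementById("canvas_images").getContext("2d");
//   makeGlyph("\u{1D11E}", 48, Math.PI).draw(ctx, 100, 50); // upside-down clef
```

Wrapping the drawing in `save()` and `restore()` means each glyph's rotation doesn't leak into whatever gets drawn next.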

In short, it’s starting to look like music, but there’s a long way to go.

Friday’s Post

I found a really very handy PDF document showing every single available music glyph in the Bravura font, organised by type. Gaze upon it and be amazed by how much notation you don’t know and how very very many types of accidentals there are. I really like the treble clef whose lower stroke ends in a down arrow. I don’t know how I missed it earlier. This is truly the best font ever. It also has letters and numbers and stuff.

I’ve got an example of using Bravura in a canvas. You have to go elsewhere to see it, because it’s downloading the font via @font-face in css and I can’t do that from this blog.

If you look at it, you should see an upside down treble clef. This is from the Bravura font. If you don’t see the treble clef, please leave a comment with what browser you’re using (ex: Internet Explorer), the version number (ex: 4.05), the sort of device (ex: Desktop, laptop, tablet, iphone, etc) and the operating system.

I got that glyph into the canvas by typing the unicode into the string in my text editor. The editor is using a different font, so this is kind of annoying because looking at the text source doesn’t show the result or even the unicode number.
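One way around the unreadable-source problem would be to keep the code point visible as an escape or a hex number instead of pasting the character. A small sketch, assuming the glyph is the Unicode G clef at U+1D11E; `glyphFromHex` is a name I made up for illustration.

```javascript
// Build a one-character string from a hexadecimal code point such as "1d11e".
function glyphFromHex(hex) {
  return String.fromCodePoint(parseInt(hex, 16));
}

const trebleClef = glyphFromHex("1d11e");

// The same character written as escapes inside a string literal:
const clefEscaped = "\u{1D11E}";        // ES2015 code point escape
const clefSurrogates = "\uD834\uDD1E";  // UTF-16 surrogate pair, for older browsers
```

All three strings hold the same character, so any of them can be passed to `fillText`.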

This is an example of an image in a canvas with some text:

var c=document.getElementById("canvas_images");

var context=c.getContext("2d");

var numGlyphs = Math.floor((Math.random()*3)+1) + 1;

var blob = new Image();

var x, y;

context.font = "150% Bravura";

blob.src = "https://farm8.staticflickr.com/7322/9736783860_4c2706d4ef_m.jpg";

blob.onload = function() {

context.drawImage(blob, 0, 0);

for (var i = 0; i < numGlyphs; i++) {

x = Math.floor((Math.random()*i*50) + 1) +5;

y = Math.floor((Math.random()* 205) + 1) +7;

//1f49b

context.fillText("

Drawing

Today I went to the dentist because my dental surgery from last week was still all swollen and that turns out to not be a good sign. So I picked up some antibiotics and then went to the Goldsmiths degree show, which was interesting and extremely variable. I did not get a lot of creative pacting done today. I read a wee bit about how to declare objects in javascript, which is really easy:

var cleff = {

unicode: "&#x1d11e;",

repeats: 1

};

What I really need is just a syntax cheat sheet, since I’m already familiar with most of the concepts of this language. So I skipped ahead to canvases.

[Canvas: a red 200×100 rectangle crossed by a diagonal line and a horizontal line, with the text “unicode?”]

What the source for that looks like is:

<canvas id="myCanvas" width="200" height="100" style="border:1px solid #d3d3d3;">

Your browser does not support the HTML5 canvas tag.</canvas>

<script>

var c=document.getElementById("myCanvas");

var ctx=c.getContext("2d");

ctx.fillStyle="#FF0000";

ctx.fillRect(0,0,200,100);

ctx.fillStyle="#000000";

ctx.moveTo(0,0);

ctx.lineTo(200,100);

ctx.stroke();

ctx.fillStyle="#0000FF";

ctx.moveTo(0, 32);

ctx.lineTo(200, 32);

ctx.stroke();

ctx.fillText("unicode?",32,32)

</script>

I need to learn how to get unicode into those strings. Just typing it in with the ctrl-shift-u thing doesn’t work, nor does typing the html escape sequence. Also, it seems like changing the line colour didn’t work.
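On the line colour: in the canvas 2D API, `fillStyle` only affects fills and text, while lines drawn with `stroke()` take their colour from `strokeStyle`. Without a `beginPath()` call, every `stroke()` also redraws all the earlier line segments. A minimal sketch of a helper that handles both, where `strokeLine` is just an illustrative name:

```javascript
// Draw one straight line in its own colour.
function strokeLine(ctx, x1, y1, x2, y2, colour) {
  ctx.beginPath();          // start a fresh path so earlier lines are not re-stroked
  ctx.moveTo(x1, y1);
  ctx.lineTo(x2, y2);
  ctx.strokeStyle = colour; // strokeStyle colours lines; fillStyle does not
  ctx.stroke();
}

// In a browser, with the canvas from the listing above:
//   var ctx = document.getElementById("myCanvas").getContext("2d");
//   strokeLine(ctx, 0, 0, 200, 100, "#000000");
//   strokeLine(ctx, 0, 32, 200, 32, "#0000FF");
```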

Chosen Symbols

I picked which symbols I’m going to use. (I have to link to them rather than post them on this page because they use a bundled font, and some web browsers want that font to come from the same server as the text. I can’t upload the font to blogspot, as far as I know.)

A couple of iPad users let me know they can see the upside-down treble clef, so I know that this font thing works on that device, so this is excellent news.

The symbols are sort-of grouped into noteheads, rests, lines, clefs, fermatas, circles, triangles, diamonds, and groovy percussion symbols. There are some cases in which different unicode chars seem to map to identical symbols. Some of the symbols, like the bars, don’t make sense by themselves, but need to have several grouped together. There’s going to need to be some logic in how symbols are chosen by the program and used. Strings might be pre-defined, so regexes might indicate repetitions of a glyph.
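The repetition idea isn't worked out yet, so this is only a guess at one possible shape for it. It assumes a made-up notation in which a glyph followed by `{n}` means "repeat this glyph n times", and `expandRepeats` is a hypothetical name.

```javascript
// Expand a hypothetical "glyph{n}" notation into n copies of the glyph.
// The "u" flag makes "." match a whole code point, so glyphs outside the
// Basic Multilingual Plane are repeated intact rather than split in half.
function expandRepeats(pattern) {
  return pattern.replace(/(.)\{(\d+)\}/gu, function (match, glyph, count) {
    return glyph.repeat(Number(count));
  });
}

// For example, expandRepeats("a{3}b") gives "aaab", and a pre-defined string
// like "\u{1D100}{4}" would expand to four copies of that one glyph.
```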

While going through some papers, I found my first notes from when I first thought of this piece.

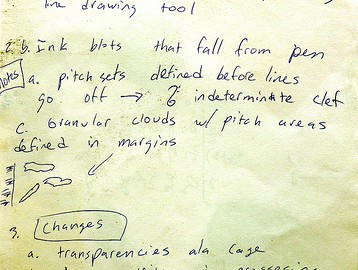

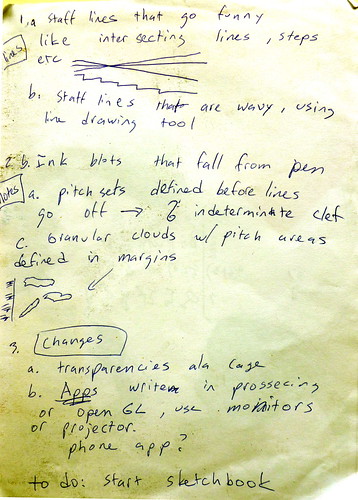

This piece started as a pen and paper idea. The ideas were:

Lines

- staff lines that go funny, like intersecting lines, steps, etc.

- wavy lines using a line drawing tool.

Notes

- pitch sets defined before the lines go off –> indeterminate clefs

- Ink blots that fall from an ink pen

- granular clouds w/ pitch areas defined in margins

Changes

- Transparencies, ala Cage

- Apps written in Processing or OpenGL, use monitors or projector.

- phone app?

The staff lines that go off is a good idea. They could start straight and then curve or just always be intersecting. This can’t use the staff notation in the font, but will need to be a set of rules on how to draw lines.

Using indeterminate clefs is not as good an idea – it just means there are two possible versions of every note, which just builds chords.

By the end of making that outline, it was no longer a pen and paper piece!

Granular clouds are not well suited to vocal ensembles, but this idea did become another piece.

Cloud Drawings from Charles Céleste Hutchins on Vimeo.