I’ve just shipped off the first commission! I can’t say much about it yet, as it’s a surprise birthday present for someone, but I’ll post a copy to my podcast shortly after their birthday arrives.

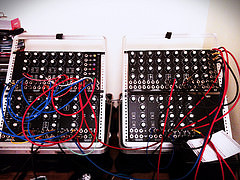

While making it, I learned a bit about how to make better use of a module I’ve had for a long time. Also, I think I set a personal record for the most cables used in a single patch! It doesn’t look like much in the picture, but only because the black cables are hard to see against the black synth, and they outnumber the other colours. Details of what I learned and what all those cables were doing will go into the programme notes when I post the audio later.

I’m already hard at work on the next one, which I worked on while keeping up with the comet news. I think all the sounds are recorded, so now I’m just mixing. I wanted some more manual-sounding fades and pans, so I’m using physical faders. The online documentation was a bit spotty on how to get this working, so I’ve put some instructions at the bottom of this post.

Noise music commissions make great gifts for birthdays, the holidays or just as a surprise! They come not only as audio, but with a story of how they were made, including influences and even some instructions on how to recreate the sounds. This is especially fun for people interested in music or sound design! If you order in November, delivery is guaranteed in time for Hanukkah or Christmas!

Using MIDI faders with Ardour on Linux

These instructions are for a NanoKontrol2, but should work for many other popular MIDI fader controllers.

- The Ardour manual starts out very well, so follow its instructions to get going.

- Start Ardour.

- Go to qjackctl and click the connect button.

- Expand all to find the NanoKontrol2 in the list on the left.

- Draw a connection between the NanoKontrol2 on the left and Ardour on the right. (You will need to make this connection every time you start Jack and Ardour.)

- Go to Ardour and open preferences.

- Go to the Control Surfaces tab.

- Enable ‘Generic MIDI’.

- Then double-click ‘Generic MIDI’.

- A window will open listing a bunch of control surfaces.

- NanoKontrol2 appears in the list twice. The one that mentions ‘Master’ maps the 8th fader to the master fader and uses the other seven for tracks. The other one uses all eight faders for tracks.

- NanoKontrol2 does not support touch, so you can use it on tracks whose automation is set to ‘Write’ or ‘Manual’, but not ‘Play’ or ‘Touch’.

- Record enable will not behave correctly unless you run the NanoKontrol2 configuration software in WINE.
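Because the qjackctl connection has to be redrawn every time JACK and Ardour start, it can help to script it. Below is a minimal sketch in Python, not a tested recipe. It assumes the `jack_lsp` and `jack_connect` command-line tools that come with JACK are installed, and that the NanoKontrol2 shows up as a JACK MIDI port (for example through a2jmidid). If it only appears under qjackctl’s ALSA tab, ALSA’s `aconnect` tool would be needed instead. The port-name fragments `nanokontrol2` and `midi control in` are guesses, so check what `jack_lsp` prints on your system and edit them to match.

```python
#!/usr/bin/env python3
"""Reconnect a MIDI fader controller to Ardour's MIDI control input in JACK.

A minimal sketch. The port-name fragments below are assumptions: run
`jack_lsp` to see the real port names on your system and edit them to match.
"""
import subprocess
import sys

# Hypothetical fragments for the controller's capture (outgoing) port and
# Ardour's generic MIDI control input port. Adjust after checking `jack_lsp`.
CONTROLLER_FRAGMENT = "nanokontrol2"
ARDOUR_FRAGMENT = "midi control in"


def find_port(ports, *fragments):
    """Return the first port whose name contains every fragment (case-insensitive)."""
    for port in ports:
        name = port.lower()
        if all(fragment.lower() in name for fragment in fragments):
            return port
    return None


def list_ports():
    """Ask JACK for every port name, one per line, using the jack_lsp tool."""
    result = subprocess.run(
        ["jack_lsp"], capture_output=True, text=True, check=True, timeout=10
    )
    return [line.strip() for line in result.stdout.splitlines() if line.strip()]


def main():
    """Find both ports and connect them; return True on success."""
    try:
        ports = list_ports()
    except (OSError, subprocess.SubprocessError) as err:
        print(f"Could not list JACK ports ({err}); is JACK running?", file=sys.stderr)
        return False

    # Require "capture" so we pick the port the controller sends on,
    # not the one it receives on.
    source = find_port(ports, CONTROLLER_FRAGMENT, "capture")
    target = find_port(ports, ARDOUR_FRAGMENT)
    if source is None or target is None:
        print("Could not find both ports; check the names jack_lsp prints.",
              file=sys.stderr)
        return False

    try:
        # Controller's output port first, then Ardour's input port.
        subprocess.run(["jack_connect", source, target], check=True, timeout=10)
    except (OSError, subprocess.SubprocessError) as err:
        print(f"jack_connect failed ({err})", file=sys.stderr)
        return False

    print(f"Connected {source} -> {target}")
    return True


if __name__ == "__main__":
    main()
```

Matching on name fragments rather than full port names is deliberate: bridge tools such as a2jmidid may add numbers to the device name that can differ between sessions, while the device name itself usually stays the same. Run it once Ardour has started, for example with `python3 connect_faders.py` (a hypothetical filename).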

Do you love noise music? Are you into fashion? Drop me an email if you’d like your picture to appear in forthcoming posts about noise and fashion.

A recycled synth I saw on Sunday.

![Puppy](http://www.celesteh.com/blog/wp-content/uploads/2014/11/15671223591_80b3e1e7cc_z.jpg)